Welcome to this issue of Digitalisation World, which includes a major focus on Smart Cities. As with so many of the digital transformation technologies and strategies currently being developed and/or implemented right across the globe, the jury is out as to what will, or won’t, be acceptable in the smart cities of the future. Clearly, intelligent automation has a massive role to play, but this inevitably brings with it concerns over security. And while the world continues merrily on its way despite almost daily news of security breaches of various types and sizes, the more critical the application, the more critical its security becomes.

A bank’s customers lose some money, or lose access to their money, or have their financial details hacked – none of this is great news but, hopefully, no one dies as a result. Entrust a city’s utilities and transport infrastructure to automation, and security becomes a great deal more critical – lives are at risk. That’s not a reason not to do it, but the risks and the rewards need to be evaluated extremely carefully before any decisions are made.

Alongside this growing security issue, we have the equally important consideration of…human beings. Just what will we, or won’t we, accept in terms of having our lives ordered, measured, monitored and, ultimately, governed by technology – most notably, what many might see as an increasing invasion of privacy that allows very little human activity to go ‘under the radar’? From the moment individuals wake up and start using water, heating and food supplies, through the journey to work (wherever that might be), time spent at work and lunchtime, to the day’s downtime spent watching, listening, eating or playing sport, there’s every chance that all of these activities will be monitored and measured – ostensibly to provide valuable feedback to the supply industry, so that it can fine-tune and improve our experiences.

Conspiracy theorists are having a field day focusing on the links (imagined and real) between some of the hyperscale technology providers and various governments around the world but, on a more mundane level, do we want, or should we have to endure, a world where, say, your insurer is constantly adjusting your health premium based on the lifestyle you lead?

On the flip side, technology allows us to create our own world, where we can shut out huge amounts of potentially important information simply by choosing not to view it – as in the case of choosing what news we receive. That’s not particularly healthy.

So, we arrive at the subject of ethics. Indeed, hardly a day passes when I don’t receive one or more press releases focusing on the topic of AI and ethics. Call me cynical, but big business and ethics don’t tend to mix very well, so I’ve no great expectations as to the likely winner in the digital transformation ‘battle’ between what’s possible and what’s acceptable. So, while a smart city as portrayed in Blade Runner might seem a long way off right now, if we haven’t wiped out the planet in the meantime, it’s a definite possibility in the future!

A new survey has shown that 88% of companies believe ‘self-service’ will be the fastest growing channel in customer service by 2021.

The State of Native Customer Experience Report, revealed at Unbabel’s Customer Centric Conference 2019, details the opinions of senior executives surveyed at global companies (including several Fortune 500 organizations) regarding their worldwide multilingual customer support operations across the technology, retail, travel, finance, business services and entertainment sectors.

The survey was commissioned by multilingual support provider Unbabel and run by Execs In The Know, a global community of customer experience professionals.

The report reveals that ‘average speed of answer’ is no longer the gold standard by which customer support is measured. When asked which general factors had the highest impact on customer satisfaction, nearly all respondents (92%) rated “solving the customer’s problem” as having the most impact, followed by providing “knowledgeable support agents” (64%), with “speed of case resolution” (62%) only the third most important.

In other words, first-time resolution — delivered by agents well-equipped to understand and address customer queries — has emerged as the key performance metric. More than 80% of companies surveyed stated that they were investing in chatbots to meet these growing levels of customer demand.

“In a highly competitive landscape, delivering high-quality support in a customer’s native language can be a major differentiator for businesses,” said Chad McDaniel, Execs In The Know President and Co-Founder. “With 76% of the respondents expecting live chat to increase and 70% expecting social media volumes to increase over the next two years, businesses need to ensure their native language strategy is effectively servicing all customers in all channels.”

The survey also provided a definitive ranking for the most expensive languages to cover in customer support. Japanese ranked first as the most expensive language, followed by German, French, and Chinese.

When asked, “What is your biggest and most painful challenge in terms of languages and customer service?” nearly half of survey respondents (47%) identified sourcing and agent retention as the primary pain point.

While sourcing and retaining talent for high-demand customer service languages remain priorities for global organizations, technological advances in natural language processing and machine learning are paving the way for more intelligent virtual assistants that can accommodate changes and fluctuations in customer demand.

Unbabel CEO Vasco Pedro commented: “This research makes it clear that the channels where customer interactions take place are evolving along with technological and cultural shifts. In line with Unbabel’s roadmap, the vast majority of respondents expect self-service volumes to increase over the next two years, followed by live chat, social media platforms and text messaging. It makes sense: as primarily digital consumers establish more buying power, they are also demanding comprehensive digital support. Today, it’s more important than ever for businesses to adapt to a globalized economy and serve their customers quickly, cost-effectively, and above all in their native language.”

Business efficiency increases by two thirds when technology is implemented with a supporting culture and long-term digital vision.

Research from Oracle and the WHU - Otto Beisheim School of Management has shown business efficiency increases by two thirds when the right technology is implemented alongside seven key factors. According to the research, many organisations have invested in the right technologies, but are lacking the culture, skills or behaviours necessary to truly reap their benefits. The study found business efficiency only increases by a fifth when technology is implemented without the identified seven factors.

The seven key factors are: data-driven decision making, flexibility & embracing change, entrepreneurial culture, a shared digital vision, critical thinking & questioning, learning culture and open communication & collaboration.

The new research questioned 850 HR Directors as well as 5,600 employees on the ways organisations can adapt for a competitive advantage in the digital age. The study showed that achieving business efficiency is critical to becoming an agile organisation that can keep pace with change, with 42% of businesses reporting an overall increase in organisational performance once business efficiency was achieved.

“Pace of change has never been more important for organisations than it is in the current climate,” says Wilhelm Frost, from the Department for Industrial Organization and Microeconomics at WHU - Otto Beisheim School of Management. “Adaptability and agility are extremely important for organisations if they want to get ahead of the competition and offer market-leading propositions. Being adaptable means better support for customers, and needs to happen to meet their needs, but it’s also a big factor in any company attracting and retaining employees with the skills to drive them forward. Companies unprepared for the relentless pace of change will simply not be able to compete for skills in today’s digital marketplace.”

The research showed that a third of business leaders worldwide don’t think they are currently operating in a way that allows them to attract – or compete for – talent. This rose to over half of business leaders in markets such as India, Brazil and Chile. Meanwhile, a quarter of employees worldwide said they were worried about losing their jobs to machines.

“The study highlights the opportunity for HR to step up and lead workforce transformation by allowing the productivity benefits of technology to be realised,” said Joachim Skura, Strategy Director HCM Applications, Oracle. “Too many organisations are implementing technology but not properly integrating it into the business. Human workers still fear it’s them versus the machines when, in fact, organisational growth will come from the two working together. With any technology implementation, there needs to be both a culture change and upskilling of staff to work with machines and technology. It’s these digital skills that make up the seven factors needed to realise the true benefits of any technology and become an adaptable business.”

Findings show a gap between the expected benefits of the public cloud and what it is actually delivering for enterprises today.

Cohesity has published results of a global survey of 900 senior IT decision makers that shows a major expectation gap exists between what IT managers hoped the public cloud would deliver for their organisations and what has actually transpired.

More than 9 in 10 respondents across the UK believed, when they started their journey to the cloud, that it would simplify operations, increase agility, reduce costs and provide greater insight into their data. However, of those who felt the promise of public cloud hadn’t been realised, 95 percent believe this is because their data is greatly fragmented in and across public clouds and could become nearly impossible to manage in the long term.

“While providing many needed benefits, the public cloud also greatly proliferates mass data fragmentation,” said Raj Rajamani, VP Products, Cohesity. “We believe this is a key reason why 38 percent of respondents say their IT teams are spending between 30 and 70 percent of their time managing data and apps in public cloud environments today.”

There are several factors contributing to mass data fragmentation in the public cloud. First, many organisations have deployed multiple point products to manage fragmented data silos, but this can add significant management complexity. The survey, commissioned by Cohesity and conducted by Vanson Bourne, found that 42 percent are using 3-4 point products to manage their data (specifically backups, archives, files and test/dev copies) across public clouds today, while nearly a fifth (19 percent) are using as many as 5-6 separate solutions. Respondents expressed concerns about using multiple products to move data between on-premises and public cloud environments if those products don’t integrate: 59 percent are concerned about security, 49 percent worry about costs and 44 percent are concerned about compliance.

Additionally, data copies can increase fragmentation challenges. A third of respondents (33 percent) have four or more copies of the same data in public cloud environments, which can not only increase storage costs but create data compliance challenges.

"The public cloud can empower organisations to accelerate their digital transformation journey, but first organisations must solve mass data fragmentation challenges to reap the benefits,” continued Rajamani. “Businesses suffering from mass data fragmentation are finding data to be a burden, not a business driver.”

Disconnect between senior management and IT

IT leaders are also struggling to comply with mandates from senior business leaders within their organisations. Almost nine in ten (87 percent) respondents say that their IT teams have been given a mandate by senior management to move to the public cloud. However, nearly half of those respondents (42 percent) say they are struggling to come up with a strategy that uses the public cloud to the full benefit of the organisation.

“Nearly 80 percent of respondents stated their executive team believes it is the public cloud service provider's responsibility to protect any data stored in public cloud environments, which is fundamentally incorrect,” said Rajamani. “This shows executives are confusing the availability of data with its recoverability. It’s the organisation’s responsibility to protect its data.”

Eliminating fragmentation unlocks opportunities to realise the promise of the cloud

Despite these challenges, more than nine in ten (91 percent) believe that the public cloud service providers used by their organisation offer a valuable service. The vast majority (97 percent) expect that their organisation’s public cloud-based storage will increase by 94 percent on average between 2018 and the end of 2019.

Nearly nine in ten (88 percent) believe the promise of the public cloud can be better realised if solutions are in place that can help them solve mass data fragmentation challenges across their multi-cloud environments. Respondents believe there are numerous benefits that can be achieved by tackling data fragmentation in public cloud environments, including generating better insights through analytics and artificial intelligence (46 percent), improving the customer experience (46 percent), and maintaining or increasing brand reputation and trust by reducing the risk of compliance breaches (43 percent).

“It’s time to close the expectation gap between the promise of the public cloud and what it can actually deliver to organisations around the globe,” said Rajamani. “Public cloud environments provide exceptional agility, scalability and opportunities to accelerate testing and development, but it is absolutely critical that organisations tackle mass data fragmentation if they want the expected benefits of cloud to come to life.”

The Internet of Things (IoT) is the emerging technology that offers the most immediate opportunities to generate new business and revenues, according to the Emerging Technology Community at CompTIA, the leading trade association for the global tech industry.

The community has released its second annual Top 10 Emerging Technologies list, ranked according to the near-term business and financial opportunities the solutions offer to IT channel firms and other companies working in the business of technology.

The Internet of Things also topped the community’s 2018 Top 10 list.

“Everybody in the technology world, as well as many consumers, is hearing the term Internet of Things,” said Frank Raimondi, a member of the CompTIA Emerging Technology Community leadership group who works in strategic channel and business development for Chargifi.

“To say it’s confusing and overwhelming is an understatement,” Raimondi continued. “IoT may mean many things to many people, but it can clearly mean incremental or new business to a channel partner if they start adding relevant IoT solutions with their existing and new customers. More importantly, they don’t have to start over from scratch.”

Artificial intelligence (AI) ranks second on the 2019 list.

“The largest impacts across all industries – from retail to healthcare, hospitality to finance – are felt when AI improves data security, decision-making speed and accuracy, and employee output and training,” said Maddy Martin, head of growth and education for Smith.ai and community vice chair.

“With more capable staff, better-qualified sales leads, more efficient issue resolution, and systems that feed actual data back in for future process and product improvements, companies employing AI technologies can use resources with far greater efficiency,” Martin added. “Best of all, as investment and competition increase in the AI realm, costs are reduced.”

Third on this year’s list of top emerging technologies is 5G wireless.

“The development and deployment of 5G is going to enable business impact at a level few technologies ever have, providing wireless at the speed and latency needed for complex solutions like driverless vehicles,” said Michael Haines, director of partner incentive strategy and program design for Microsoft and community chair.

“Additionally, once fully deployed geographically, 5G will help emerging markets realize the same ‘speed of business’ as their mature counterparts,” Haines commented. “Solution providers that develop 5G-based solutions for specific industry applications will have profitable, early-mover advantages.”

Also on the top 10 list is blockchain, coming in at number five this year.

“Blockchain came crashing down from the peak of its hype cycle, and that’s probably for the best,” said Julia Moiseeva, founder of CLaaS (C-Level as a Service) Management Solutions Ltd. and member of the community’s leadership group. “Now that the luster of novelty and furor of the masses are gone, the dynamic of work around blockchain took a complete U-turn, again, for the best.”

“Now we observe players in this space building blockchain-based solutions in response to the real industry problems,” Moiseeva explained. “The trend of blockchain as a service (BaaS) is the one to watch. BaaS will be the enabler of significant revenue and cost-saving opportunities for cross-industry participants, especially those who don’t have the know-how or R&D to develop their own blockchain. We are moving toward plug-and-play product suites.”

Two new technologies, serverless computing and robotics, made the 2019 list, replacing automation and quantum computing.

Densify has published the findings of a global enterprise cloud survey of IT professionals.

The survey found that, when it comes to deploying workloads in the cloud, the top priorities for most organisations are all about the applications. They are focused on how to ensure applications perform well, how to keep the environments secure, and how to achieve these goals within budget. With 55% of the respondents coming from enterprises with over 1,000 employees, these global organisations have concerns over how to ensure apps function well in the cloud. 66% of the organisations are running multi-cloud environments, with AWS the clear leader (70% usage), followed by Azure (57% usage) and Google Cloud Platform (31% usage). On-prem private cloud usage was at 37%.

Container technology is rapidly being adopted to run applications and microservices, with 44% of the respondents already running containers and another 24% looking into them. Six months ago, when Densify conducted its last market survey, the share of respondents already running containers was 19%, showing strong growth in container adoption in just half a year. As for container platforms, the top technology is from AWS: 56% of the audience use either Amazon Elastic Container Service (ECS) or Amazon Elastic Container Service for Kubernetes (EKS). However, Kubernetes in general, running on AWS, Azure, Google and IBM Cloud, is the most popular platform.

While enterprises have fully embraced the cloud and containers, there are some issues in the way people adopt the cloud that can introduce risk to their businesses. A surprisingly large number of participants (40%) said they are not certain about, or up to speed with, the latest cloud technologies from the cloud providers, or how to leverage them for their own success. When asked how they decide on and select the optimal cloud resources to run their applications, 55% reported “best guess” and “tribal knowledge” as their main strategy.

Summary of Key Findings:

o Container adoption has risen from 19% to 44% in the last six months

o Managed Kubernetes services (e.g. Amazon’s EKS, Azure’s AKS, Google’s GKE) are the winning platforms (66%) when it comes to container management and orchestration, followed by Amazon Elastic Container Service (ECS).

o More than 55% are using best guess or tribal knowledge to specify container CPU and memory Request and Limit values, which is a major issue for running containers successfully
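The “best guess” problem in the last bullet concerns the `requests` and `limits` fields in a Kubernetes container spec: requests are what the scheduler reserves for a container, a container that exceeds its memory limit can be killed, and one that hits its CPU limit is throttled. As a rough illustration of a data-driven alternative, the Python sketch below derives those values from observed usage samples. It is not Densify’s method; the function names, the 90th-percentile rule for requests and the 20% headroom over peak for limits are hypothetical choices made for illustration only.

```python
import math

def nearest_rank_percentile(samples, pct):
    """Return the pct-th percentile of samples using the nearest-rank method."""
    ordered = sorted(samples)
    # Integer arithmetic in the numerator avoids floating-point surprises in the rank.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def suggest_resources(cpu_millicores, memory_mib, request_pct=90, headroom_pct=120):
    """Hypothetical sizing rule: request = usage percentile, limit = peak plus headroom."""
    cpu_request = nearest_rank_percentile(cpu_millicores, request_pct)
    mem_request = nearest_rank_percentile(memory_mib, request_pct)
    cpu_limit = math.ceil(max(cpu_millicores) * headroom_pct / 100)
    mem_limit = math.ceil(max(memory_mib) * headroom_pct / 100)
    # Format values in Kubernetes quantity notation (millicores and mebibytes).
    return {
        "requests": {"cpu": f"{math.ceil(cpu_request)}m", "memory": f"{math.ceil(mem_request)}Mi"},
        "limits": {"cpu": f"{cpu_limit}m", "memory": f"{mem_limit}Mi"},
    }

# Illustrative, made-up usage samples for a single container.
cpu_samples = [100, 120, 130, 150, 160, 170, 180, 200, 250, 400]
mem_samples = [256, 260, 270, 280, 300, 310, 320, 330, 350, 500]
print(suggest_resources(cpu_samples, mem_samples))
```

A production approach would likely need much longer observation windows and would treat CPU (which can be throttled) and memory (which cannot) differently; the single rule here only shows that the numbers can come from measurement rather than tribal knowledge.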

“The cloud is really complex, with hundreds of services from each cloud vendor, making the selection of the right services for business super difficult,” said Yama Habibzai, CMO, Densify. “It really is humanly impossible to align the workload and application demands to the right cloud resources, without automation and analysis.”

A primary objective of these enterprises is to control their cloud spend, which ranked as their third most important objective in the survey results. But the data shows that 45% of the audience are spending more than they have budgeted, with 20% spending more than $1.2 million per year. Furthermore, 55% of the audience rely on manual effort and guesswork to select the cloud resources for their workloads, which directly drives up their cloud risk and spend.

When asked whether automating the selection of optimal cloud/container resources could help them achieve their objectives, 80% responded favourably, saying it could help them reduce application risk and drive down their cloud spend.

Securing the Internet of Things (IoT) is something which cannot be done with a one-size-fits-all approach – and every kind of connected object must be assessed individually, the Co-chair of Trusted Computing Group’s (TCG) Embedded Systems Work Group said recently.

Speaking at the Embedded Technologies Expo and Conference 2019, Steve Hanna highlighted how the growing trend for greater connectivity puts everyday objects at risk of exploitation and makes mission critical systems in businesses and Governments more vulnerable to attacks.

And while securing the IoT is often referred to as a singular movement, Hanna emphasized that every device had to be handled according to its individual needs, warning that there would be no single method that could be universally applied to safeguard devices.

“When you consider other security systems, for example a lock, what you would use for a front door is very different to what would be used for a bank or a Government building because the scale of an attack would be much greater and more complex in the case of the latter,” he said. “The same is true for computers and embedded systems; when we think about security, we have to think about different levels that correspond to the level of risk.”

Hanna illustrated his point by comparing a baby monitor with a chemical plant – both of which are likely to become connected as standard in the near-future. For the latter, he said, the impact of an attack could be as serious as an explosion which would ultimately endanger human life.

“While it is important to secure things like baby monitors, for example, to avoid the devices being used to eavesdrop on conversations, there is a price point that needs to be met as well – no one is going to spend thousands of dollars on a baby monitor and for the manufacturers, that means the security solution needs to be less expensive,” continued Hanna. “In the case of a chemical plant, the risk is much greater, the level of attack is likely to be more sophisticated and a serious amount of money could have been invested in carrying it out. As a result, the security measures need to be much more stringent.”

Hanna went on to explain that the customized security approach required by the Internet of Things can be easily achieved using technologies that are available today. TCG’s security standards are all based on the concept of Trusted Computing where a Root of Trust forms the foundation of the device and meets the specific requirements of the device or deployment.

“TCG’s wide variety of security options provide the building blocks to create secure systems,” said Hanna. “In the case of a chemical plant, industrial-grade discrete TPM hardware can be built not just into the plant’s firewall but also into the control system. This will enable these systems to be monitored in real-time and for even sophisticated attacks to be identified and prevented. For devices which are less high-risk, TPM firmware can be created which has the same set of commands but is less rigorously secured and therefore more cost-effective. Finally, for very tiny devices that can’t afford TPM firmware, DICE offers a good alternative.”
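Hanna’s lowest tier, DICE (Device Identifier Composition Engine), rests on a simple idea: at boot, a secret called the Compound Device Identifier (CDI) is derived from a hardware Unique Device Secret (UDS) and a measurement of the first mutable code, so changed firmware yields a different identity. The Python sketch below illustrates that derivation, assuming HMAC-SHA256 as the one-way function and a SHA-256 digest as the measurement; the function names and the all-zero placeholder UDS are hypothetical. On a real device the UDS is held in hardware and made inaccessible before the measured code runs.

```python
import hashlib
import hmac

def measure(code_image: bytes) -> bytes:
    """Measurement of a code layer: here simply its SHA-256 digest."""
    return hashlib.sha256(code_image).digest()

def derive_cdi(secret: bytes, next_layer: bytes) -> bytes:
    """CDI as a one-way function (HMAC-SHA256) of a secret and the next layer's measurement."""
    return hmac.new(secret, measure(next_layer), hashlib.sha256).digest()

# Placeholder UDS for illustration only; a real UDS is device-unique and hardware-protected.
uds = bytes(32)
firmware_v1 = b"bootloader build 1.0"
firmware_tampered = b"bootloader build 1.0 + implant"

cdi_v1 = derive_cdi(uds, firmware_v1)
cdi_tampered = derive_cdi(uds, firmware_tampered)

# Layering: each stage derives the next stage's secret from its own CDI.
os_cdi = derive_cdi(cdi_v1, b"os image 2.3")

print(cdi_v1 == derive_cdi(uds, firmware_v1))  # same code -> same identity
print(cdi_v1 == cdi_tampered)                  # modified code -> different identity
```

In this sketch, any keys derived from the tampered image’s CDI no longer match those of the genuine firmware, which is how a verifier could notice the change without the cost of a TPM.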

Updated forecast projects growth at edge and precipitous talent drain.

Five years ago, Vertiv led a global, industry-wide examination of the data center of the future. Data Center 2025: Exploring the Possibilities stretched the imaginations of more than 800 industry professionals and introduced a collaborative vision for the next-generation data center. Today, Vertiv released a mid-point update – Data Center 2025: Closer to the Edge – and it reveals fundamental shifts in the industry that barely registered in the forecasts from five short years ago.

The migration to the edge is changing the way today’s industry leaders think about the data center. They are grappling with a broad data center ecosystem made up of many types of facilities and relying increasingly on the edge of the network. Of participants who have edge sites today or expect to have edge sites in 2025, more than half (53%) expect the number of edge sites they support to grow by at least 100%, with 20% expecting a 400% or more increase. Collectively, survey participants expect their total number of edge computing sites will grow 226% between now and 2025.
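Percentage increases of this size are easy to misread: a 100% increase doubles the number of sites, a 400% increase means five times as many, and the collective 226% growth implies roughly 3.3 times today’s total. The short Python sketch below makes the conversion explicit; the function names and the example starting count of 10 sites are hypothetical and are not figures from the survey.

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a multiplier (a 100% increase means 2x)."""
    return 1 + percent_increase / 100

def projected_sites(current_sites: int, percent_increase: float) -> float:
    """Project a site count forward by a percentage increase."""
    return current_sites * growth_multiplier(percent_increase)

# Compare the three growth figures quoted in the survey for a hypothetical 10 sites.
for pct in (100, 226, 400):
    print(f"{pct}% increase -> x{growth_multiplier(pct):.2f}, "
          f"10 sites become {projected_sites(10, pct):.1f}")
```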

During the original 2014 research, the edge was acknowledged as a growing trend but merited just four mentions in the 19-page report. The industry’s attention at that point was focused firmly on hybrid architectures leveraging enterprise, cloud and colocation resources. Even in an industry that routinely moves and changes at light speed, the growth of the edge and the dramatic impact it will have on the data center is staggering.

“In just five short years, we have seen the emergence of an entirely new segment of the ecosystem, driven by the need to locate computing closer to the user,” said Rob Johnson, Vertiv CEO. “This new distributed network is reliant on a mission-critical edge that has fundamentally changed the way we think about the data center.”

“Making predictions about technology shifts more than two or three years ahead is challenging, but this research aligns with the vision of an ever-changing and incredibly dynamic market which is unfolding in front of our eyes,” said Giordano Albertazzi, president for Vertiv in Europe, Middle East and Africa. “Specifically, the estimates for future growth in edge computing are consistent with the predicted growth in AI, IoT and other latency and bandwidth dependent applications. The challenge – especially given the shortage in data center personnel – will be managing all of that new infrastructure effectively and efficiently. Remote management and approaches such as lights-out data centers will play an increasingly important role.”

More than 800 data center professionals participated in the survey. Among the other notable results:

Zero trust technologies that enable seamless, secure user authentication are critical; 6 in 10 employees are disrupted, irritated and frustrated by passwords, and waste time on them.

MobileIron has revealed the results of a survey conducted with IDG, which found that enterprise users and security professionals alike are frustrated by the inefficiency and lax security of passwords for user authentication. With 90% of security professionals reporting that they have seen unauthorized access attempts as a result of stolen credentials, it’s clear that the future of security requires a next generation of authentication that’s more secure.

According to MobileIron, mobile devices are the best option for replacing passwords, as they remain at the centre of enterprises in terms of where business is done, how access is given, and how authentication is done. In fact, the survey revealed that almost nine in ten (88%) security leaders believe that mobile devices will soon serve as digital ID to access enterprise services and data.

The survey, sponsored by MobileIron, polled 200 IT security leaders in the US, UK, Australia, and New Zealand working in a range of industries at companies with at least 500 employees. The aim was to discover the major authentication pain points facing enterprises.

“It’s time to say goodbye to passwords. They not only cause major frustrations for users and IT professionals, but they also pose major security risks,” said Rhonda Shantz, Chief Marketing Officer at MobileIron. “That’s why MobileIron is ushering in a new era of user authentication with a mobile ID and zero sign-on experience from any device, any OS, any location, to any service. With more and more users accessing apps and company data via their own mobile devices, it’s not only easier to leverage mobile devices than passwords for user authentication – it’s also much more secure.”

The perils of passwords:

The merits of mobile:

Okta research shows workers are ready to go passwordless this year.

Okta has debuted The Passwordless Future Report, which demonstrates how passwords negatively impact the security of organisations and the mental health of employees. The research, which surveyed 4,000+ workers across the UK, France and the Netherlands, also found that there is a readiness for passwordless security methods such as biometrics, with 70% of workers believing biometrics would benefit the workplace.

Dr. Maria Bada, Research Associate, Cambridge University, said, “Okta’s research clearly showed that employees can experience negative emotions and stress due to forgetting a password and that can impact not only their career but also their emotional health. And this is not due to forgetting a password but due to using an insecure method to remember passwords. Biometric technology can be promising in creating a passwordless future, but it's essential to create an environment of trust, while ensuring privacy and personal data protection.”

Passwords are the ideal targets for cyber crime

The majority of hacking-based breaches are a result of reused, stolen or weak passwords. Okta’s research found that in total, 78% of respondents use an insecure method to help them remember their password and this rises to 86% among 18-34 year olds. Some of these memory aids include:

Dr. Bada said, “Passwords are often quite revealing. They are created on the spot, so users might choose something that readily comes to mind or something with emotional significance. Passwords tap into things that are just below the surface of consciousness. Criminals take advantage of this and with a little research they can easily guess a password.”

Passwords impact mental health in the workplace

Anxiety is on the rise in the workplace due to several factors, but security is one that has flown under the radar. The Passwordless Future Report found that 62% of respondents feel stressed or annoyed as a result of forgetting their password. This was highest in the UK (69%), compared with France (65%) and the Netherlands (53%). The average worker must remember a total of 10 passwords in everyday life which evokes negative emotions in two-thirds of respondents (63%).

Dr. Bada said, “The potential impact from forgetting a password can cause extreme levels of stress, which over time can lead to breakdown or burnout. That is due to our brains being sensitive to perceived threats. Being constantly focused on potential threats online causes us to become hypersensitive to stress. In the long term that can cause mental health problems.”

The future is passwordless

By combining methods such as biometrics and machine learning with strong authentication, organisations can remove inadequate gateways like passwords altogether.

A staggering 70% of respondents feel there are advantages to using biometric technology in the workplace. This is highest in France (78%) and among 18-34 year olds across all regions (81%). Almost one-third (32%) feel that biometric technology could make their day-to-day life easier or reduce their stress and anxiety levels in the workplace. However, 86% of respondents have some reservations about sharing biometrics with their employers, demonstrating that workers are ready for the ease of use, but do not trust organisations to protect their data.

Todd McKinnon, CEO and co-founder of Okta concluded, “At Okta, we believe deeply in the potential for technology, and that for organisations of all sizes and industries attempting to become technology companies, trust is the new frontier. Today, businesses need to adopt technology that enables them to innovate quickly, while prioritising the security, privacy, and consent controls that help them to be trusted. Passwords have failed us as an authentication factor, and enterprises need to move beyond our reliance on this ineffective method. In 2019, we will see the first wave of organisations going completely passwordless and Okta’s customers will be at the forefront.”

New EfficientIP report, in partnership with IDC, shows 34% increase in DNS attacks.

EfficientIP, a leading specialist in DNS security for service continuity, user protection and data confidentiality, has published the results of its 2019 Global DNS Threat Report, sponsored research conducted by market intelligence firm IDC.

Over the past year, organizations faced on average more than nine DNS attacks, an increase of 34%. Costs also rose 49%, with one in five businesses losing over $1 million per attack, and 63% of those attacked suffered application downtime. Other issues highlighted by the study, now in its fifth year, include the broad range and changing popularity of attack types, from volumetric to low-signal, including phishing (47%), malware-based attacks (39%) and old-school DDoS (30%).

The study also highlighted the greater consequences of not securing the DNS network layer against all possible attacks. No sector was spared, leaving organizations open to a range of serious effects, from compromised brand reputation to lost business.

Romain Fouchereau, Research Manager European Security at IDC, says, “With an average cost of $1m per attack, and a constant rise in frequency, organisations just cannot afford to ignore DNS security and need to implement it as an integral part of the strategic functional area of their security posture to protect their data and services.”

DNS is a central network foundation which enables users to reach all the apps they use for their daily work. Most network traffic, whether legitimate or malicious, first goes through a DNS resolution process, so any impact on DNS performance has major business implications. Well-publicized cyber attacks such as WannaCry and NotPetya caused financial and reputational damage to organizations across the world, and because of the mission-critical role DNS plays, the impact of DNS-based attacks is just as significant.

The top impacts of DNS attacks: damaged reputation, business continuity and finances

Three in five organizations (63%) suffered application downtime, 45% had their websites compromised, and one-quarter (27%) experienced business downtime as a direct consequence. These could all potentially lead to serious NISD (Network and Information Security Directive) penalties. In addition, one-quarter (26%) of businesses had lost brand equity due to DNS attacks.

Data theft via DNS continues to be a problem. To protect against this, organizations are prioritizing securing network endpoints (32%) and looking for better DNS traffic monitoring (29%).

David Williamson, CEO of EfficientIP, summarized the research: “While these figures are the worst we have seen in five years of research, the good news is that the importance of DNS is at last being widely recognized by businesses. Mainstream organizations are now starting to leverage DNS as a key part of their security strategy to help with threat intelligence, policy control and automation, thus building a good foundation for their zero trust plan.”

New data from Synergy Research Group shows that just 20 metro areas account for 56% of worldwide retail colocation revenues. Ranked by revenue generated in Q1 2019, the top five metros are Tokyo, New York, London, Washington and Shanghai, which in aggregate account for 25% of the worldwide market.

The next 15 largest metro markets account for another 31% of the market. Those top 20 metros include eight in North America, seven in the APAC region, four in EMEA and one in Latin America. In Q1 Equinix was the retail colocation market leader by revenue in 14 of the top 20 metros, with NTT being the only other operator to lead in more than one of the top metros. In wholesale colocation there is a somewhat different mix and ranking of metros, but the market is even more concentrated with the top 20 metros accounting for 71% of worldwide revenue. North America features more heavily in wholesale and accounts for eleven of the top 20 metros. Digital Realty is the leader in eight of the top 20 wholesale markets and Global Switch the leader in three others. Other colocation operators that feature heavily in the top 20 metros include 21Vianet, @Tokyo, China Telecom, CoreSite, CyrusOne, Interxion, KDDI, SingTel and QTS.

Over the last twelve quarters the top 20 metro share of the worldwide retail colocation market has been relatively constant at around the 55-56% mark, despite a push to expand data center footprints and to build out more edge locations. Among the top 20 metros, those with the highest retail colocation growth rates (measured in local currencies) are Sao Paulo, Sydney, Beijing, Shanghai and Frankfurt, all of which had a rolling annualized growth rate of over 15%. While the US didn’t feature among the highest growth metros for retail colocation, on the wholesale side both Washington/Northern Virginia and Silicon Valley are growing at double-digit rates.

“We continue to see robust demand for colocation across the board, with the standout regional growth numbers coming from APAC and the highest segment-level growth coming from colocation services for hyperscale operators,” said John Dinsdale, a Chief Analyst and Research Director at Synergy Research Group. “It is particularly noteworthy that the market remains concentrated around the most important economic hubs, reflecting the importance of proximity to major customers. Hyperscale operators often focus their own large data center builds away from the major metros, in areas where real estate prices and operating costs are much lower, so they too will increasingly rely on colocation providers to help target clients in key metros. The large metros will maintain their share of the colocation market over the coming years.”

One feature of the rising force that is managed services is how many other IT organisations want to be its friend. The latest, though by no means newcomers to the party, are the distributors. As they move quickly from being mere product suppliers, many are recognising that they have a changed role that requires them to adapt to the supply of services, which also affects their contract conditions.

At the industry’s leading regional distributor forum, the European Summit of the GTDC (Global Technology Distribution Council), which globally represents over $150bn of annual sales, distributors talked about how they are working with providers of managed services, and acknowledged that there are still some areas of the relationship to work through.

Tim Henneveld of distributor TIM wants the process of change to happen faster: “There are always things that can be done better. Among the challenges that we see is one that has always been there - that sometimes things take too long to get done or to get changed.”

The MSP business also brings the challenge of working out how to integrate MSP programmes with vendors’ legacy channel programmes, he says. “So usually there is a channel model and then there's an MSP model and these businesses are growing together. And this has to be reflected from our perspective and in the channels with the vendors.”

A second challenge is how cloud services are resold by the vendors through distributors. This covers licensing and financing but that's a small part of it, says Tim Henneveld. It's more about the contract. “So we see challenges in risk allocation. We always have an issue that contracts for cloud services that means using a certain court in a certain country and a code for regulation and there are issues of a limitation of liability offered in other countries.”

“That risk discussion and who takes what risk in the reselling of cloud is something that is today from our opinion not solved,” he concluded.

Eric Nowak, President of Arrow ECS EMEA, said: “Predictability means both sides have their own responsibilities and this calls for consistency. This is a long-term partnership that we have as distributors.”

And then there is how distributors market themselves to the channel, including MSPs, as Miriam Murphy, SVP of Tech Data, says: “It is now much more of a solutions-sell environment. The really important thing as we build that out is that our vendor partners are very open to collaborating with each other in activities that we do. They should be open to investing in marketing funding and enablement funding to support multi-vendor solutions and also in solutions that involve services.”

Which is all good news for MSPs, though in such a fast-changing industry, they need to be on top of trends and new offerings, especially those coming through distribution. The next snapshot of the industry will be revealed at the London Managed Services & Hosting Summit 2019 on 18th September (https://mshsummit.com/), and at the similar Manchester event on 30 October (https://mshsummit.com/north/).

The Managed Services & Hosting Summits are firmly established as the leading Managed Services event for the channel and feature conference session presentations by major industry speakers and a range of sessions exploring both technical and sales/business issues.

Now in its ninth year, the London Managed Services & Hosting Summit 2019 aims to provide insights into how managed services continues to grow and change as customer demands expand suppliers into a strategic advisory role, and the pressures for compliance and resilience impact the business model at a time of limited resources. Managed Service Providers, other channels and their suppliers can evolve new business models and relationships but are looking for advice and support as well as strategic business guidance.

Reflecting the transformational nature of the enterprise technology world which it serves, this year’s 10th edition of Angel Business Communications’ premier IT awards has a new name. The SVC Awards have become... the SDC Awards!

Ten years ago, SVC stood for Storage, Virtualisation and Channel – and the SVC Awards focused on these important pillars of the overall IT industry. Fast forward to 2019, and virtualisation has given way to software-defined, which, in turn, has become an important subset of digital transformation. Storage remains important, and the Cloud has emerged as a major new approach to the creation and supply of IT products and services. Hence the decision to change one small letter in our awards; but, in doing so, we believe that we’ve created a set of awards that are of much bigger significance to the IT industry.

The SDC (Storage, Digitalisation + Cloud) Awards – the new name for Angel Business Communications’ IT awards, which are now firmly focused on recognising and rewarding success in the products and services that are the foundation for digital transformation!

Categories

The SDC Awards 2019 feature a number of categories, providing a wide range of options for organisations and individuals involved in the IT industry to participate.

Our editorial staff will only validate entries, checking that they meet the entry criteria outlined for each category. We will then announce the ‘shortlist’ to be voted on by the readers of the Digitalisation World stable of titles. Voting takes place in October and November. The selection of winners is based solely on the public votes received. The winners will be announced at a gala evening event at London’s Millennium Gloucester Hotel on 27 November 2019.

Vendor Channel Program of the Year

Managed Services Provider Innovation of the Year

IT Systems Distributor of the Year

IT Systems Reseller/Managed Services Provider of the Year

If you’d like to enter your company for one or more categories, you can do so at:

Robotic process automation (RPA) software revenue grew 63.1% in 2018 to $846 million, making it the fastest-growing segment of the global enterprise software market, according to Gartner, Inc. Gartner expects RPA software revenue to reach $1.3 billion in 2019.

“The RPA market has grown since our last forecast, driven by digital business demands as organizations look for ‘straight-through’ processing,” said Fabrizio Biscotti, research vice president at Gartner. “Competition is intense, with nine of the top 10 vendors changing market share position in 2018.”

The top-five RPA vendors controlled 47% of the market in 2018. The vendors ranked sixth and seventh achieved triple-digit revenue growth (see Table 1). “This makes the top-five ranking appear largely unsettled,” Mr. Biscotti added.

Table 1: RPA Software Market Share by Revenue, Worldwide (Millions of Dollars)

| 2017 Rank | 2018 Rank | Company | 2017 Revenue | 2018 Revenue | 2017-2018 Growth (%) | 2018 Market Share (%) |
|---|---|---|---|---|---|---|
| 5 | 1 | UiPath | 15.7 | 114.8 | 629.5 | 13.6 |
| 1 | 2 | Automation Anywhere | 74.0 | 108.4 | 46.5 | 12.8 |
| 3 | 3 | Blue Prism | 34.6 | 71.0 | 105.0 | 8.4 |
| 2 | 4 | NICE | 36.0 | 61.5 | 70.6 | 7.3 |
| 4 | 5 | Pegasystems | 28.9 | 41.0 | 41.9 | 4.8 |
| 8 | 6 | Kofax | 10.4 | 37.0 | 256.6 | 4.4 |
| 11 | 7 | NTT-AT | 4.9 | 28.5 | 480.9 | 3.4 |
| 6 | 8 | EdgeVerve Systems | 15.7 | 20.5 | 30.1 | 2.4 |
| 7 | 9 | OpenConnect | 15.2 | 16.0 | 5.3 | 1.9 |
| 9 | 10 | HelpSystems | 10.2 | 13.7 | 34.3 | 1.6 |
| | | Others | 273.0 | 333.8 | 22.2 | 39.4 |
| | | Total | 518.8 | 846.2 | 63.1 | 100.0 |

Due to rounding, numbers may not add up precisely to the totals shown

Source: Gartner (June 2019)
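As a quick cross-check of Table 1, the short Python sketch below recomputes growth and 2018 market share from the revenue figures in the table. Because the inputs are rounded to $0.1M, some recomputed growth rates differ slightly from Gartner's published ones (UiPath, for instance, comes out near 631% rather than 629.5%). The dictionary and function names are illustrative only.

```python
# Revenue in millions of US dollars as (2017, 2018), copied from Table 1.
REVENUE = {
    "UiPath": (15.7, 114.8),
    "Automation Anywhere": (74.0, 108.4),
    "Blue Prism": (34.6, 71.0),
    "NICE": (36.0, 61.5),
    "Pegasystems": (28.9, 41.0),
    "Kofax": (10.4, 37.0),
    "NTT-AT": (4.9, 28.5),
    "EdgeVerve Systems": (15.7, 20.5),
    "OpenConnect": (15.2, 16.0),
    "HelpSystems": (10.2, 13.7),
    "Others": (273.0, 333.8),
}
TOP_FIVE_2018 = ["UiPath", "Automation Anywhere", "Blue Prism", "NICE", "Pegasystems"]


def growth_pct(before: float, after: float) -> float:
    """Year-over-year growth in percent."""
    return (after / before - 1.0) * 100.0


def share_pct(part: float, whole: float) -> float:
    """Share of a total in percent."""
    return part / whole * 100.0


# Sum of all rows, including "Others"; should match the 846.2 total.
total_2018 = sum(r18 for _, r18 in REVENUE.values())
top_five_share = share_pct(sum(REVENUE[v][1] for v in TOP_FIVE_2018), total_2018)

for vendor, (r17, r18) in REVENUE.items():
    print(f"{vendor:<20} growth {growth_pct(r17, r18):6.1f}%  "
          f"share {share_pct(r18, total_2018):5.1f}%")
print(f"Top-five share of 2018 revenue: {top_five_share:.1f}%")
```

The top-five figure lands just under 47%, in line with the “47% of the market” quoted above.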

North America continued to dominate the RPA software market, with a 51% share in 2018, but its share dropped by 2 percentage points year over year. Western Europe held the No. 2 position, with a 23% share. Japan came third, with adoption growth of 124% in 2018. “This shows that RPA software is appealing to organizations across the world, due to its quicker deployment cycle times, compared with other options such as business process management platforms and business process outsourcing,” said Mr. Biscotti.

Digital Transformation Efforts Drive RPA Adoption

Although RPA software can be found in all industries, the biggest adopters are banks, insurance companies, telcos and utility companies. These organizations traditionally have many legacy systems and choose RPA solutions to ensure integration functionality. “The ability to integrate legacy systems is the key driver for RPA projects. By using this technology, organizations can quickly accelerate their digital transformation initiatives, while unlocking the value associated with past technology investments,” said Mr. Biscotti.

Gartner expects the RPA software market to look very different three years from now. Large software companies, such as IBM, Microsoft and SAP, are partnering with or acquiring RPA software providers, which means they are increasing the awareness and traction of RPA software in their sizable customer bases. At the same time, new vendors are seizing the opportunity to adapt traditional RPA capabilities for digital business demands, such as event stream processing and real-time analytics.

“This is an exciting time for RPA vendors,” said Mr. Biscotti. “However, the current top players will face increasing competition, as new entrants will continue to enter a market whose fast evolution is blurring the lines distinguishing RPA from other automation technologies, such as optical character recognition and artificial intelligence.”

The future of the database market is in the Cloud

By 2022, 75% of all databases will be deployed or migrated to a cloud platform, with only 5% ever considered for repatriation to on-premises, according to Gartner, Inc. This trend will largely be due to databases used for analytics and the SaaS model.

“According to inquiries with Gartner clients, organizations are developing and deploying new applications in the cloud and moving existing assets at an increasing rate, and we believe this will continue to increase,” said Donald Feinberg, distinguished research vice president at Gartner. “We also believe this begins with systems for data management solutions for analytics (DMSA) use cases — such as data warehousing, data lakes and other use cases where data is used for analytics, artificial intelligence (AI) and machine learning (ML). Increasingly, operational systems are also moving to the cloud, especially with conversion to the SaaS application model.”

Gartner research shows that 2018 worldwide database management system (DBMS) revenue grew 18.4% to $46 billion. Cloud DBMS revenue accounts for 68% of that 18.4% growth — and Microsoft and Amazon Web Services (AWS) account for 75.5% of the total market growth. This trend reinforces that cloud service provider (CSP) infrastructures and the services that run on them are becoming the new data management platform.
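The headline growth figures can be unpacked with a little arithmetic. The sketch below derives the implied 2017 base and the dollar value of the 2018 growth from the rounded $46 billion and 18.4% figures, then applies the 68% and 75.5% shares to that growth. These are back-of-envelope estimates, not numbers Gartner published, and the function name is hypothetical.

```python
def implied_prior_year(current: float, growth_rate: float) -> float:
    """Back out last year's value from this year's value and its growth rate."""
    return current / (1.0 + growth_rate)


REVENUE_2018_BN = 46.0  # worldwide DBMS revenue in $bn (rounded headline figure)
GROWTH_2018 = 0.184     # 18.4% year-over-year growth

revenue_2017_bn = implied_prior_year(REVENUE_2018_BN, GROWTH_2018)
growth_bn = REVENUE_2018_BN - revenue_2017_bn
cloud_dbms_growth_bn = 0.68 * growth_bn  # portion of growth attributed to cloud DBMS
ms_aws_growth_bn = 0.755 * growth_bn     # portion attributed to Microsoft + AWS

print(f"Implied 2017 revenue: ${revenue_2017_bn:.1f}bn")
print(f"2018 growth: ${growth_bn:.1f}bn, of which cloud DBMS ${cloud_dbms_growth_bn:.1f}bn")
print(f"Microsoft + AWS contribution: ${ms_aws_growth_bn:.1f}bn")
```

On these assumptions the market added roughly $7bn in 2018, around $5bn of it in the cloud.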

Ecosystems are forming around CSPs that both integrate services within a single CSP and provide early steps toward intercloud data management. This is in distinct contrast to the on-premises approach, where individual products often serve multiple roles but rarely offer their own built-in capabilities to support integration with adjacent products within the on-premises deployment environment. While there is some growth in on-premises systems, this growth is rarely from new on-premises deployments; it is generally due to price increases and forced upgrades undertaken to avoid risk.

“Ultimately what this shows is that the prominence of the CSP infrastructure, its native offerings, and the third-party offerings that run on them is assured,” said Mr. Feinberg. “A recent Gartner cloud adoption survey showed that of those on the public cloud, 81% were using more than one CSP. The cloud ecosystem is expanding beyond the scope of a single CSP — to multiple CSPs — for most cloud consumers.”

Leveraging the automation continuum is security and risk management leaders’ latest imperative in creating and preserving value at their organization, according to Gartner, Inc.

Katell Thielemann, research vice president at Gartner, recently explained to an audience of more than 3,500 security and risk management professionals at the Gartner Security and Risk Management Summit that the automation continuum emerging in the security and risk landscape is one where new mindsets, practices and technologies are converging to unlock new capabilities. Identity, data, and new product and service development were identified as three critical areas for automation in the security and risk enterprise.

“We are no longer asking the singular question of how we’re managing risk and providing security to our organization. We’re now being asked how we’re helping the enterprise realize more value while assessing and managing risk, security and even safety. The best way to bring value to your organizations today is to leverage automation,” said Ms. Thielemann.

Automation is All Around Us

Automation is already all around us — and it is starting to impact the security and risk world in two ways:

“Automation follows a continuum of sophistication and complexity, and can use a number of techniques, either stand-alone or in combination,” said David Mahdi, senior research director at Gartner. “For example, robotic process automation currently works best in task-centric environments, but process automation is evolving to increasingly powerful bots, and eventually to autonomous process orchestration.”

By 2021, 17% of the average organization’s revenue will be devoted to digital business initiatives, and by 2022, content creators will produce more than 30% of their digital content with the aid of AI content-generation techniques.

“What this means to security and risk management professionals is that our organizations are likely building solutions and making technology-related choices often without realizing the risk implications of what they are doing,” said Mr. Mahdi.

Balancing Emerging Technologies and People

“Automation is just the beginning. Emerging technologies will change everything and impact security and risk directly,” said Beth Schumaecker, director, advisory at Gartner. “Our reliance on data is ever increasing, yet it poses one of the largest privacy risks to organizations. In the next two years, half of large industrial companies will use some emerging form of digital twins, which will also need to be secured.”

The demands of these emerging technologies and digital transformation introduce new talent challenges for the security function, altering how organizations expect security to be delivered.

“Digital transformation demands that security staff play a wider range of roles, from strategic consultants to threat profilers to product managers, which in turn require new skills and competencies,” said Ms. Schumaecker. “It’s already impossible to fill all our existing vacancies.”

Mission-Critical Areas in Automation

The three mission-critical areas in today’s enterprises are automation in identity, data and new products or services development:

Interest in blockchain continues to be high, but there is still a significant gap between the hype and market reality. Only 11% of CIOs indicated they have deployed or are in short-term planning with blockchain, according to the Gartner, Inc. 2019 CIO Agenda Survey of more than 3,000 CIOs. This may be because the majority of projects fail to get beyond the initial experimentation phase.

“Blockchain is currently sliding down toward the Trough of Disillusionment in Gartner’s latest ‘Hype Cycle for Emerging Technologies,’” said Adrian Leow, senior research director at Gartner. “The blockchain platforms and technologies market is still nascent and there is no industry consensus on key components such as product concept, feature set and core application requirements. We do not expect that there will be a single dominant platform within the next five years.”

To successfully conduct a blockchain project, it is necessary to understand the root causes for failure. Gartner has identified the seven most common mistakes in blockchain projects and how to avoid them.

No. 1: Misunderstanding or Misusing Blockchain Technology

Gartner has found that the majority of blockchain projects are solely used for recording data on blockchain platforms via decentralized ledger technology (DLT), ignoring key features such as decentralized consensus, tokenization or smart contracts.

“DLT is a component of blockchain, not the whole blockchain. The fact that organizations are so infrequently using the complete set of blockchain features prompts the question of whether they even need blockchain,” Mr. Leow said. “It is fine to start with DLT, but the priority for CIOs should be to clarify the use cases for blockchain as a whole and move into projects that also utilize other blockchain components.”

No. 2: Assuming the Technology Is Ready for Production Use

The blockchain platform market is huge and largely composed of fragmented offerings that try to differentiate themselves in various ways. Some focus on confidentiality, some on tokenization, others on universal computing. Most are too immature for large-scale production work and the systems, security and network management services it requires.

However, this will change within the next few years. CIOs should monitor the evolving capabilities of blockchain platforms and align their blockchain project timeline accordingly.

No. 3: Confusing a Protocol With a Business Solution

Blockchain is a foundation-level technology that can be used in a variety of industries and scenarios, ranging from supply chain management to medical information systems. It is not a complete application, as a complete application must also include features such as a user interface, business logic, data persistence and interoperability mechanisms.

“When it comes to blockchain, there is the implicit assumption that the foundation-level technology is not far removed from a complete application solution. This is not the case. It helps to view blockchain as a protocol to perform a certain task within a full application. No one would assume a protocol can be the sole base for a whole e-commerce system or a social network,” Mr. Leow added.

No. 4: Viewing Blockchain Purely as a Database or Storage Mechanism

Blockchain technology was designed to provide an authoritative, immutable, trusted record of events arising out of a dynamic collection of untrusted parties. This design model comes at the price of database management capabilities.

In its current form, blockchain technology does not implement the full “create, read, update, delete” (CRUD) model that is found in conventional database management technology. Instead, only “create” and “read” are supported. “CIOs should assess the data management requirement of their blockchain project. A conventional data management solution might be the better option in some cases,” Mr. Leow said.
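To make the “create and read only” point concrete, here is a toy Python sketch of a hash-chained, append-only log. It is an illustrative assumption, not how any particular blockchain platform is implemented: there is no consensus, networking or signing, and the class and method names are hypothetical. A “correction” is simply a new record appended to the end; rewriting an existing record in place breaks the hash chain, which `verify()` detects.

```python
import hashlib
import json
from dataclasses import dataclass, replace

GENESIS_HASH = "0" * 64


@dataclass(frozen=True)
class Block:
    index: int
    payload: str
    prev_hash: str
    block_hash: str


def _digest(index: int, payload: str, prev_hash: str) -> str:
    """SHA-256 over the block contents plus the previous block's hash."""
    data = json.dumps([index, payload, prev_hash]).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


class AppendOnlyLedger:
    """Toy ledger: 'create' (append) and 'read' only; no update or delete."""

    def __init__(self) -> None:
        self._blocks: list = []

    def append(self, payload: str) -> Block:
        prev = self._blocks[-1].block_hash if self._blocks else GENESIS_HASH
        index = len(self._blocks)
        block = Block(index, payload, prev, _digest(index, payload, prev))
        self._blocks.append(block)
        return block

    def read(self, index: int) -> str:
        return self._blocks[index].payload

    def verify(self) -> bool:
        """Recompute every hash and check each link to its predecessor."""
        prev = GENESIS_HASH
        for b in self._blocks:
            if b.prev_hash != prev or b.block_hash != _digest(b.index, b.payload, b.prev_hash):
                return False
            prev = b.block_hash
        return True


ledger = AppendOnlyLedger()
ledger.append("shipment 42 received")
ledger.append("shipment 42 quantity corrected to 95")  # corrections are new entries
print(ledger.read(0), "|", ledger.verify())

# Simulate an out-of-band "update" by rewriting block 0's payload in place.
tampered = AppendOnlyLedger()
tampered.append("shipment 42 received")
tampered.append("shipment 42 quantity corrected to 95")
tampered._blocks[0] = replace(tampered._blocks[0], payload="shipment 99 received")
print(tampered.verify())  # the chain no longer verifies
```

Anything that genuinely needs updates or deletes, such as correcting personal data, has to be modelled on top of this structure, which is the data-management gap the advice above warns about.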

No. 5: Assuming That Interoperability Standards Exist

While some vendors of blockchain technology platforms talk about interoperability with other blockchains, it is difficult to envision interoperability when most platforms and their underlying protocols are still being designed or developed.

Organizations should view vendor discussions regarding interoperability as a marketing strategy. It is supposed to benefit the supplier’s competitive standing but will not necessarily deliver benefits to the end-user organization. “Never select a blockchain platform with the expectation that it will interoperate with next year’s technology from a different vendor,” said Mr. Leow.

No. 6: Assuming Smart Contract Technology Is a Solved Problem

Smart contracts are perhaps the most powerful aspect of blockchain-enabling technologies. They add dynamic behavior to transactions. Conceptually, smart contracts can be understood as stored procedures that are associated with specific transaction records. But unlike a stored procedure in a centralized system, smart contracts are executed by all nodes in the peer-to-peer network, resulting in challenges in scalability and manageability that haven’t been fully addressed yet.
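The stored-procedure analogy, and the reason replicated execution raises scalability questions, can be illustrated with a toy simulation. In this Python sketch, every simulated node runs the same deterministic contract over the same ordered transactions. All names are hypothetical, and real platforms add consensus, fee metering and much more. The nodes agree on the final state, but total execution grows with the number of nodes, whereas a centralized stored procedure runs once per transaction.

```python
from typing import Callable, Dict, List, NamedTuple, Tuple

State = Dict[str, int]


class Tx(NamedTuple):
    sender: str
    receiver: str
    amount: int


Contract = Callable[[State, Tx], State]


def transfer_contract(state: State, tx: Tx) -> State:
    """Hypothetical contract: move funds only if the sender can cover them."""
    new_state = dict(state)  # never mutate the caller's state
    if new_state.get(tx.sender, 0) >= tx.amount:
        new_state[tx.sender] -= tx.amount
        new_state[tx.receiver] = new_state.get(tx.receiver, 0) + tx.amount
    return new_state


def run_node(contract: Contract, genesis: State, txs: List[Tx]) -> Tuple[State, int]:
    """One node replays every transaction through the contract."""
    state = dict(genesis)
    executions = 0
    for tx in txs:
        state = contract(state, tx)
        executions += 1
    return state, executions


def simulate_network(n_nodes: int, contract: Contract, genesis: State,
                     txs: List[Tx]) -> Tuple[State, bool, int]:
    """Run the contract on every node; report state, agreement and total work."""
    results = [run_node(contract, genesis, txs) for _ in range(n_nodes)]
    states = [state for state, _ in results]
    agreed = all(state == states[0] for state in states)
    total_executions = sum(count for _, count in results)
    return states[0], agreed, total_executions


genesis = {"alice": 100, "bob": 0}
txs = [Tx("alice", "bob", 30), Tx("bob", "carol", 50)]  # second tx is rejected
final_state, agreed, total = simulate_network(5, transfer_contract, genesis, txs)
print(final_state, agreed, total)
```

With five nodes and two transactions the contract runs ten times; determinism is what lets the nodes agree, and the repeated work is the scalability cost referred to above.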

Smart contract technology will still undergo significant changes. CIOs should not plan for full adoption yet but run small experiments first. This area of blockchain will continue to mature over the next two or three years.

No. 7: Ignoring Governance Issues

While governance issues in private or permissioned blockchains will usually be handled by the owner of the blockchain, the situation is different with public blockchains.

“Governance in public blockchains such as Ethereum and Bitcoin is mostly aimed at technical issues. Human behaviors or motivation are rarely addressed. CIOs must be aware of the risk that blockchain governance issues might pose for the success of their project. Especially larger organizations should think about joining or forming consortia to help define governance models for the public blockchain,” Mr. Leow concluded.

The number of devices connected to the Internet, including the machines, sensors, and cameras that make up the Internet of Things (IoT), continues to grow at a steady pace. A new forecast from International Data Corporation (IDC) estimates that there will be 41.6 billion connected IoT devices, or "things," generating 79.4 zettabytes (ZB) of data in 2025.

As the number of connected IoT devices grows, the amount of data generated by these devices will also grow. Some of this data is small and bursty, indicating a single metric of a machine's health, while large amounts of data can be generated by video surveillance cameras using computer vision to analyze crowds of people, for example. There is an obvious direct relationship between all the "things" and the data these things create. IDC projects that the amount of data created by these connected IoT devices will see a compound annual growth rate (CAGR) of 28.7% over the 2018-2025 forecast period. Most of the data is being generated by video surveillance applications, but other categories such as industrial and medical will increasingly generate more data over time.
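A CAGR can be turned into a growth multiple with a line of arithmetic. The sketch below assumes the 28.7% CAGR compounds over seven annual periods from a 2018 base to 2025. Under that assumption, the 79.4 ZB forecast implies roughly 13.6 ZB of IoT data in 2018, and the 60% CAGR the same forecast gives for the industrial and automotive category implies roughly 27-fold growth. These derived values are illustrations, not IDC-published figures, and the function names are hypothetical.

```python
def compound_multiple(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` for `years` periods."""
    return (1.0 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value that grows to `end_value` at `cagr`."""
    return end_value / compound_multiple(cagr, years)


YEARS = 2025 - 2018  # assumption: seven compounding periods from a 2018 base

iot_2018_zb = implied_start_value(79.4, 0.287, YEARS)
industrial_multiple = compound_multiple(0.60, YEARS)

print(f"Implied 2018 IoT data: {iot_2018_zb:.1f} ZB")
print(f"Industrial/automotive growth multiple: {industrial_multiple:.1f}x")
```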

"As the market continues to mature, IoT increasingly becomes the fabric enabling the exchange of information from 'things', people, and processes. Data becomes the common denominator – as it is captured, processed, and used from the nearest and farthest edges of the network to create value for industries, governments, and individuals' lives," said Carrie MacGillivray, group vice president, IoT, 5G and Mobility at IDC. "Understanding the amount of data created from the myriad of connected devices allows organizations and vendors to build solutions that can scale in this accelerating data-driven IoT market."

"Mankind is on a quest to digitize the world and a growing global DataSphere is the result. The world around us is becoming more 'sensorized,' bringing new levels of intelligence and order to personal and seemingly random environments, and Internet of Things devices are an integral part of this process," said David Reinsel, senior vice president, IDC's Global DataSphere. "However, with every new connection comes a responsibility to navigate and manage new security vulnerabilities and privacy concerns. Companies must address these data hazards as they advance new levels of efficiency and customer experience."

While it's not surprising to see industrial and automotive equipment represent the largest opportunity of connected "things," IDC expects to see strong adoption of household (e.g., smart home) and wearable devices in the near term. Over the longer term, however, with public safety concerns, decreasing camera costs, and higher bandwidth options available (including the deployment of 5G networks offering low latency, dense coverage, and high bandwidth), video surveillance will grow in adoption at a rapid rate. Drones, while still early in adoption today, show great potential to access remote or hard-to-reach locations and will also be a big driver of data creation using cameras.

While the video surveillance category will drive a large share of the IoT data created, the industrial and automotive category will see the fastest data growth rates over the forecast period with a CAGR of 60%. This is the result of the increasing number of "things" (other than video surveillance cameras) that are capturing data continuously as well as more advanced sensors capturing more (and richer) metrics or machine functions. This rich data includes audio, image, and video. And, where analytics and artificial intelligence are magnifying data creation beyond just the data capture, data per device is growing at a faster pace than data per video surveillance camera.

It should also be noted that the IoT metadata category is a growing source of data to be managed and leveraged. IoT metadata is essentially all the data that is created about other IoT data files. While not having a direct operational or informational function in a specific data category (like industrial or video surveillance), metadata provides the information about the data files captured or created by the IoT device. Metadata is often very small compared with original source files such as a video image, sometimes by orders of magnitude. In other cases, however, metadata can approach the size of the source file, as in some manufacturing environments. In all cases, metadata is valuable data that can be leveraged to inform intelligent systems, drive personalization, or bring context to seemingly random scenarios or data sets. In other words, metadata is a prime candidate to be fed into NoSQL databases like MongoDB to bring structure to unstructured content or fed into cognitive systems to bring new levels of understanding, intelligence, and order to outwardly random environments.

There isn't enough computing capability today to process the amount of data being created and stored. A new study from International Data Corporation (IDC) finds that the processing and transformation needed to convert this data into useful and valuable insights, both for today's organizations and for a new class of workloads, must scale faster than Moore's Law ever predicted.

To address this gap, the computing industry is taking a new path that leverages alternative computing architectures, such as DSPs, GPUs, and FPGAs, to accelerate and offload computing tasks and so limit the tax on the system's general-purpose architecture. These other architectures have been key to enabling artificial intelligence, including the growing use of deep learning models. At the edge, DSPs, FPGAs, and optimized architecture blocks in SoCs have been better suited to initial inference applications for robotics, drones, wearables, and other consumer devices such as voice-assisted speakers.

The IDC study, How Much Compute Is in the World and What It Can/Can't Do? (IDC # US44034119), is part of IDC's emerging Global DataSphere program, which sizes and forecasts data creation, capture, and replication across 70 categories of content-creating things — including IoT devices. The data is then categorized into the types of data being created to understand various trends in data usage, consumption, and storage.

The study builds on over twenty years of extensive work in the embedded and computing areas of research at IDC, including leveraging an embedded market model covering about 300 system markets and the key underlying technologies that enable the value of a system. The study analyzes the shift in the computing paradigm as artificial intelligence (AI) moves from the datacenter to the edge and endpoint, expanding the choices of computing architectures for each system market as features and optimizations are mapped closer to workloads.

For decades, advances in process technology and silicon design, together with the industry's dedication to Moore's Law, have made performance gains in microprocessors, and in transistor functionality and integration in systems on chip (SoCs), predictable. These advances have been instrumental in establishing the cadence of growth and scale of client computing, smartphones, and cloud infrastructure.

Microprocessors have been at the very core of computing, and today Intel, AMD, and ARM are the bellwethers for the cadence of computing. However, the story does not end there: we are at the beginning of a large market force as AI becomes more ubiquitous across a broad base of industries and drives intelligence and inferencing to the edge.

"AI technology will continue to play a critical role in redefining how computing must be implemented in order to meet the growing diversity of devices and applications," said Mario Morales, program vice president for Enabling Technologies and Semiconductors at IDC. "Vendors are at the start of their business transformation and what they need from their partners is no longer just products and technology. To address the IoT and endpoint opportunity, performance must always find a balance with power and efficiency. Moving forward, vendors and users will require roadmaps and not just chips. This is a fundamental change for technology suppliers in the computing market and only those who adapt will remain relevant."

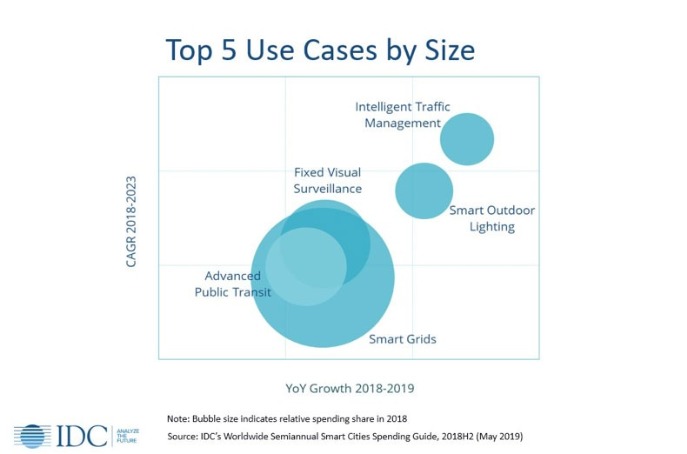

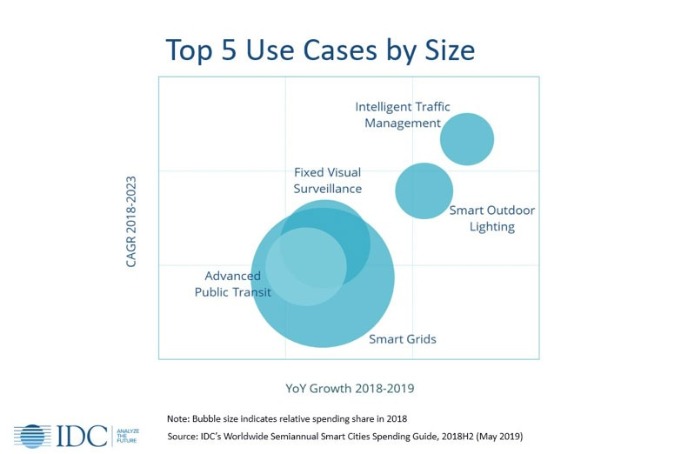

A new forecast from the International Data Corporation (IDC) Worldwide Semiannual Smart Cities Spending Guide shows global spending on smart cities initiatives will reach $189.5 billion in 2023. The top priorities for these initiatives will be resilient energy and infrastructure projects followed by data-driven public safety and intelligent transportation. Together, these priority areas will account for more than half of all smart cities spending throughout the 2019-2023 forecast.

"In the latest release of IDC's Worldwide Smart Cities Spending Guide, we expanded the scope of our research to include smart ecosystems, added detail for digital evidence management and smart grids for electricity and gas, and expanded our cities dataset to include over 180 named cities," said Serena Da Rold, program manager in IDC's Customer Insights & Analysis group. "Although smart grid and smart meter investments still represent a large share of spending within smart cities, we see much stronger growth in other areas, related to intelligent transportation and data-driven public safety, as well as platform-related use cases and digital twin, which are increasingly implemented at the core of smart cities projects globally."

The use cases that will experience the most spending over the forecast period are closely aligned with the leading strategic priorities: smart grid, fixed visual surveillance, advanced public transportation, smart outdoor lighting, and intelligent traffic management. These five use cases will account for more than half of all smart cities spending in 2019, although their share will decline somewhat by 2023. The use cases that will see the fastest spending growth over the five-year forecast are vehicle-to-everything (V2X) connectivity, digital twin, and officer wearables.

Singapore will remain the top investor in smart cities initiatives, driven by the Virtual Singapore project. New York City will have the second-largest spending total this year, followed by Tokyo and London. Beijing and Shanghai are essentially tied for fifth place, and spending in all these cities is expected to surpass the $1 billion mark in 2020.

On a regional basis, the United States, Western Europe, and China will account for more than 70% of all smart cities spending throughout the forecast. Japan and the Middle East and Africa (MEA) will experience the fastest growth in smart cities spending with CAGRs of around 21%.

"We are excited to present our continued expansion of this deep dive into the investment priorities of buyers in the urban ecosystem, with more cities added to our database of smart city spending and new forecasts that show the expanded view of smart cities, such as Smart Stadiums and Smart Campuses," said Ruthbea Yesner, vice president of IDC Government Insights and Smart Cities programs. "As our research shows, there is steady growth across the globe in the 34 use cases we have sized and forecast."

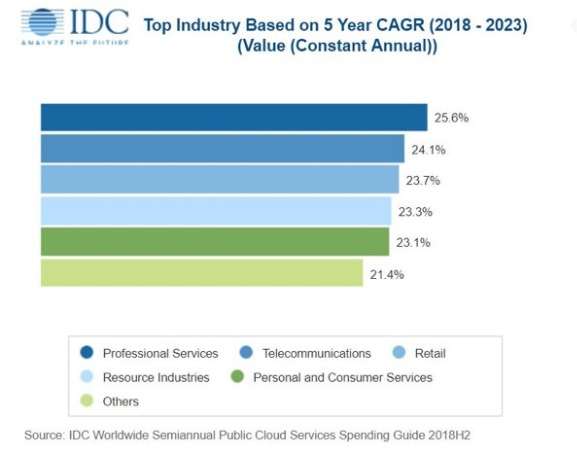

Public Cloud Services spending to more than double by 2023

Worldwide spending on public cloud services and infrastructure will more than double over the 2019-2023 forecast period, according to the latest update to the International Data Corporation (IDC) Worldwide Semiannual Public Cloud Services Spending Guide. With a five-year compound annual growth rate (CAGR) of 22.3%, public cloud spending will grow from $229 billion in 2019 to nearly $500 billion in 2023.
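As a rough check on these figures, the compound annual growth rate links a start value, an end value, and the number of yearly intervals between them: CAGR = (end / start)^(1 / years) - 1. The sketch below applies that formula to the article's rounded 2019 and 2023 values. It assumes four annual intervals between those two years and uses $500 billion as a stand-in for "nearly $500 billion", so the result is only approximate. The helper name `cagr` is illustrative, and IDC's stated five-year rate may well be measured from a base year the article does not give.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` intervals apart."""
    return (end / start) ** (1 / years) - 1

# Article figures in US$ billions; 500.0 approximates "nearly $500 billion".
spend_2019 = 229.0
spend_2023 = 500.0

# Four annual steps separate 2019 and 2023 (an assumption about the intended span).
implied = cagr(spend_2019, spend_2023, years=2023 - 2019)
print(f"Implied 2019-2023 growth rate: {implied:.1%}")
```

The implied rate comes out at roughly 21.6%, close to the 22.3% quoted, which is about as much agreement as rounded headline figures allow.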

"Adoption of public (shared) cloud services continues to grow rapidly as enterprises, especially in professional services, telecommunications, and retail, continue to shift from traditional application software to software as a service (SaaS) and from traditional infrastructure to infrastructure as a service (IaaS) to empower customer experience and operational-led digital transformation (DX) initiatives," said Eileen Smith, program director, Customer Insights and Analysis.

Software as a Service (SaaS) will be the largest category of cloud computing, capturing more than half of all public cloud spending throughout the forecast. SaaS spending, which comprises applications and system infrastructure software (SIS), will be dominated by applications purchases. The leading SaaS applications will be customer relationship management (CRM) and enterprise resource management (ERM). SIS spending will be led by purchases of security software and system and service management software.

Infrastructure as a Service (IaaS) will be the second-largest category of public cloud spending throughout the forecast, followed by Platform as a Service (PaaS). IaaS spending, which comprises servers and storage devices, will also be the fastest-growing category of cloud spending, with a five-year CAGR of 32.0%. PaaS spending will grow nearly as fast (29.9% CAGR), led by purchases of data management software, application platforms, and integration and orchestration middleware.

Three industries – professional services, discrete manufacturing, and banking – will account for more than one third of all public cloud services spending throughout the forecast. While SaaS will be the leading category of investment for all industries, IaaS will see its share of spending increase significantly for industries that are building data- and compute-intensive services. For example, IaaS spending will represent more than 40% of public cloud services spending by the professional services industry in 2023, compared to less than 30% for most other industries. Professional services will also see the fastest growth in public cloud spending, with a five-year CAGR of 25.6%.

On a geographic basis, the United States will be the largest public cloud services market, accounting for more than half the worldwide total through 2023. Western Europe will be the second largest market with nearly 20% of the worldwide total. China will experience the fastest growth in public cloud services spending over the five-year forecast period with a 49.1% CAGR. Latin America will also deliver strong public cloud spending growth with a 38.3% CAGR.

Very large businesses (more than 1,000 employees) will account for more than half of all public cloud spending throughout the forecast, while medium-sized businesses (100-499 employees) will deliver around 16% of the worldwide total. Small businesses (10-99 employees) will trail large businesses (500-999 employees) by a few percentage points, while the spending share from small offices (1-9 employees) will be in the low single digits. All the company size categories except very large businesses will experience spending growth greater than the overall market.

Spending on customer experience (CX) was reported at $97 billion in 2018 and is expected to increase to $128 billion by 2022, growing at a healthy 7% five-year CAGR, according to the International Data Corporation (IDC) Worldwide Semiannual Customer Experience Spending Guide. The European industries spending the most on CX in 2019 will be banking, retail, and discrete manufacturing. Together, these verticals will absorb 33% of the European CX spend this year. Retail will also have the fastest-growing CX spend through 2022, outgrowing banking by 2021.
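To see how a 7% CAGR connects the 2018 and 2022 figures, the sketch below compounds the $97 billion starting value forward one year at a time. It assumes the quoted rate applies uniformly across the four annual steps from 2018 to 2022; IDC's actual year-by-year forecast is not given in the article, so the intermediate values are illustrative only, and the function name `project` is hypothetical.

```python
def project(start: float, rate: float, first_year: int, last_year: int) -> dict[int, float]:
    """Compound `start` forward at a constant annual `rate`, returning value per year."""
    values = {first_year: start}
    value = start
    for year in range(first_year + 1, last_year + 1):
        value *= 1 + rate  # one year of compound growth
        values[year] = value
    return values

# Article figures for CX spending, US$ billions; 0.07 is the quoted CAGR.
cx = project(97.0, 0.07, 2018, 2022)
for year, spend in cx.items():
    print(year, round(spend, 1))
```

Four years of 7% growth land at about $127 billion in 2022, within rounding of the $128 billion forecast, which suggests the quoted rate spans the four annual steps between those years.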

Customer care and support, digital marketing, and order fulfillment are the use cases with the highest CX spending today and will remain strong investment areas through 2022. Investing in CX represents a clear opportunity for industries to differentiate, implementing these use cases to mold a public brand perception around the customer, improving websites, social media interactions, and product and service promotions. Looking at long-term opportunities, omni-channel content will be the fastest-growing CX use case by 2022, with European companies focusing on this space to build organizational experience delivery competency, leveraging investments in content and experience design to lower the cost of supporting new channels and ensure brand consistency. Omni-channel content reflects the core foundation of the future of CX through the optimization of content across channels at every point in the customer journey, creating a non-linear experience around the user.

Emerging technologies (such as AI, IoT, and AR/VR) and hyper-micro personalization are fueling investments in CX, together with rising customer expectations, intensified competition, ever-changing customer behaviors, and stronger demand for personalization. The innovations in CX are about centering the experience of a product or service around the user, approaching each customer as an individual in real time and moving away from segment-based approaches to customer engagement.

"Customer Experience is the top business priority for European companies in 2019," said Andrea Minonne, senior research analyst, IDC Customer Insight & Analysis in Europe. "Businesses are moving from traditional ways of reaching out to customers and are embracing more digitized and personalized approaches to delivering empathy where the focus is on constantly learning from customers. As a customer-facing industry, retail spend on CX is moving fast as retailers have fully understood how important it is to embed CX in their business strategy."

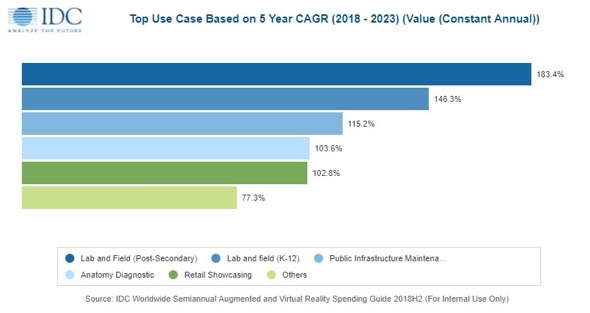

Worldwide spending on augmented reality and virtual reality (AR/VR) is forecast to reach $160 billion in 2023, up significantly from the $16.8 billion forecast for 2019. According to the International Data Corporation (IDC) Worldwide Semiannual Augmented and Virtual Reality Spending Guide, the five-year compound annual growth rate (CAGR) for AR/VR spending will be 78.3%.
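The 2019 and 2023 values alone imply a somewhat lower annual rate than the 78.3% quoted, because they span only four yearly intervals. The sketch below computes that four-step rate and, under the loudly labeled assumption that IDC's "five-year" CAGR runs from 2018 to 2023, backs out the 2018 spending level the stated rate would imply. The 2018 value is derived arithmetic, not a figure reported in the article, and `implied_base` is a hypothetical helper.

```python
def implied_base(end_value: float, rate: float, years: int) -> float:
    """Back out the starting value that grows to end_value at a fixed annual rate."""
    return end_value / (1 + rate) ** years

# Article figures, US$ billions.
spend_2019 = 16.8
spend_2023 = 160.0
stated_cagr = 0.783

# Rate implied by the 2019 and 2023 values alone (four annual steps).
four_year_rate = (spend_2023 / spend_2019) ** (1 / 4) - 1

# ASSUMPTION: the quoted "five-year" CAGR is measured from 2018 to 2023.
base_2018 = implied_base(spend_2023, stated_cagr, years=5)

print(f"2019-2023 implied rate: {four_year_rate:.1%}")
print(f"Implied 2018 spending: ${base_2018:.1f} billion")
```

This yields roughly 75.7% for the four-step rate and an implied 2018 base of about $8.9 billion. Both numbers are only as reliable as the rounded headline figures and the base-year assumption behind them.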

IDC expects much of the growth in AR/VR spending will be driven by accelerating investments from the commercial and public sectors. The strongest spending growth over the 2019-2023 forecast period will come from the financial (133.9% CAGR) and infrastructure (122.8% CAGR) sectors, while the manufacturing and public sectors will follow closely. In comparison, consumer spending on AR/VR is expected to deliver a five-year CAGR of 52.2%.